Dear Holistics Community,

We’ve been talking to many data folks, and we know the headache of dealing with unreliable & inaccurate data after metric updates. That’s why we’re experimenting with a new research project to tackle this data accuracy challenge.

We need your feedback and ideas to help shape this innovative project.

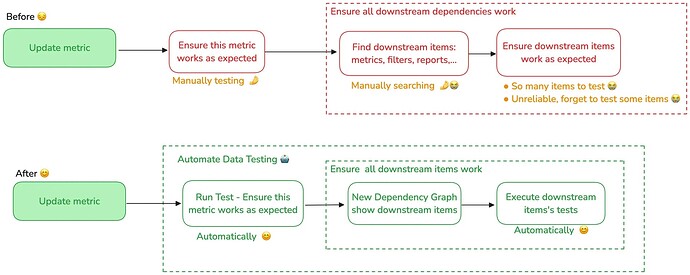

The Problem: Updating a metric can break dependencies (and cause many headaches)

When analysts update a metric, they must ensure reliability by:

- Identifying how changes impact output data.

- Ensuring no disruptions occur in downstream elements such as other metrics, filters, and reports.

- Preventing runtime errors such as AQL/SQL errors.

Currently, these steps rely on manual effort or a cumbersome review process. Because a metric is a complex chain of calculations, it’s not just about verifying your own changes; you also need to ensure they don’t disrupt others’ work. This approach is both exhausting and time-consuming.

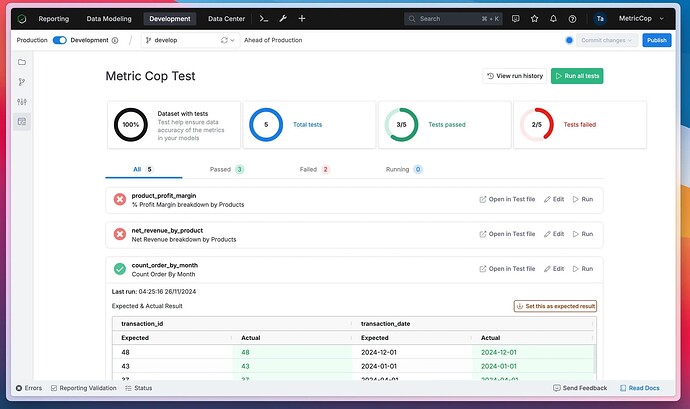

Solution: What if you could run data tests on metrics in Holistics?

Please note that this is a research prototype, and the final feature may differ from what is described here. The availability timeline is also subject to change.

High-level flow

Manual testing vs. automated data testing

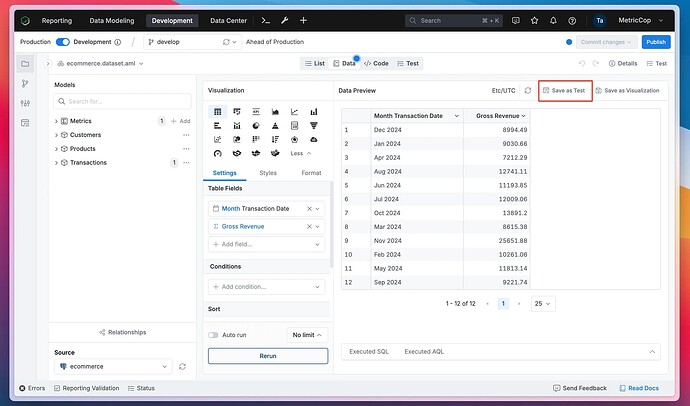

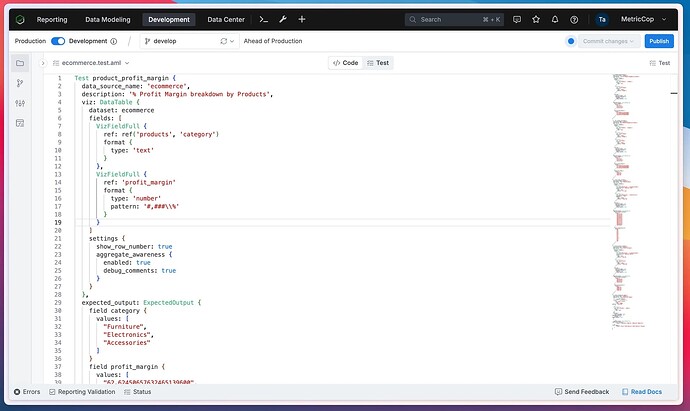

Data Testing

- Identifying how changes impact output data

  - Easily track how the data changes after updates by capturing the current output.

  - Whenever a metric is updated, run the test to identify how your output data changed. These changes could reflect expected updates or highlight errors caused by incorrect logic.

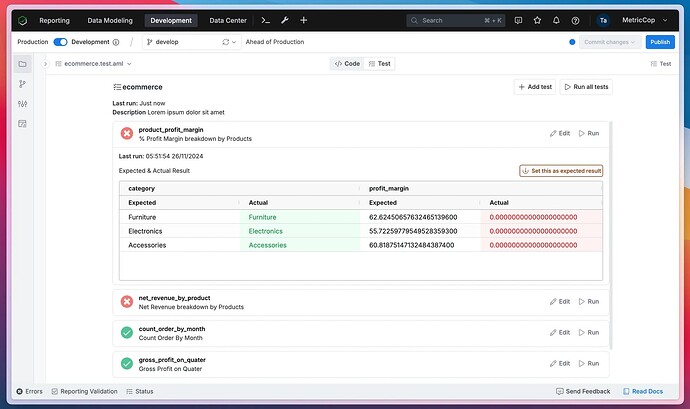

- Testing Downstream Dependencies: Ensure no unexpected changes occur in downstream elements such as other metrics, filters, and reports.

- Test Report - Coverage & Status Overview: Gain an overview of your project’s data quality by centralizing all test results in one view.
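To make the capture-then-compare idea concrete, here is a minimal sketch in Python. It is not the Holistics implementation or API. It assumes a metric's output can be modeled as a mapping from a dimension value to a number, and the names `diff_snapshots` and `is_unchanged`, as well as the sample revenue figures, are hypothetical.

```python
from typing import Dict, List

# Assumption: a metric's output is modeled as {dimension value: metric value}.
Snapshot = Dict[str, float]


def diff_snapshots(before: Snapshot, after: Snapshot,
                   tolerance: float = 1e-9) -> Dict[str, List]:
    """Compare a captured output with the output produced after an update."""
    added = sorted(k for k in after if k not in before)
    removed = sorted(k for k in before if k not in after)
    changed = sorted(
        (k, before[k], after[k])
        for k in before
        if k in after and abs(before[k] - after[k]) > tolerance
    )
    return {"added": added, "removed": removed, "changed": changed}


def is_unchanged(diff: Dict[str, List]) -> bool:
    """True when the update produced no difference in the output."""
    return not any(diff.values())


# Hypothetical data: monthly revenue captured before the metric was updated.
baseline = {"2024-01": 1200.0, "2024-02": 1350.0, "2024-03": 980.0}
# Hypothetical output of the same metric after the update.
current = {"2024-01": 1200.0, "2024-02": 1400.0, "2024-04": 1010.0}

report = diff_snapshots(baseline, current)
print(report)
```

A non-empty report does not necessarily mean a failure: the differences may be the intended effect of the update, in which case the new output would become the next captured baseline. The same comparison could be repeated for each downstream metric or report to check that nothing changed unexpectedly.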

Further Considerations for Improvement:

- CI/CD Integration: Automatically run data tests by integrating with your CI/CD workflow via API or GitHub Actions.

- AI-Assisted Test Generation: Automatically generate test cases for your metrics with AI-powered assistance.

- Dashboard Testing: Generate tests for all widgets, integration tests (filters, cross-filtering), user attributes,…

We genuinely hope that this experiment can enhance the quality of your data. We would love to hear your feedback and ideas. Your input is invaluable as we refine and expand this initiative.